wuu2?

I hate that you've got to do disclaimers before talking about anything AI (and this is not an AI blog / site), but here goes:-

- I don’t like AI as a social technology, particularly as an accountability sink (read Dan Davies - The Unaccountability Machine), as a way of constructing alternative authority sources (which support the current fascist project), and as a labour issue.

- I think it’s being massively overapplied right now, is extremely obnoxious, and has the potential to make some very dangerous mistakes.

- I don’t think it’s as useless as crypto / metaverse is, and some criticisms of those technologies have been transferred rather uncritically over. See also that I think the environmental impacts are real, but likely overexaggerated, or at least not put into context of the broader emissions / water impact of tech / the internet at large (which is substantial!).

- I’m not convinced that AI has interiority in any meaningful way, but I think we should be more careful about our confidence in what consciousness actually is (read Blindsight!) before making grand claims about it. I think AI is actually quite disturbing philosophically, and suggests that you might not actually need interiority to reason ~like a human~ - p-zombies &c.

- If you come to me with a moral panic about AI art, or talking about *souls*, then you should get a fucking grip.

- I don’t think it’s going to end the world. If it is, there’s fuck all I can do about it, so I’ll revert back to my previous position of not worrying about it.

Anyway, the main thing is this:-

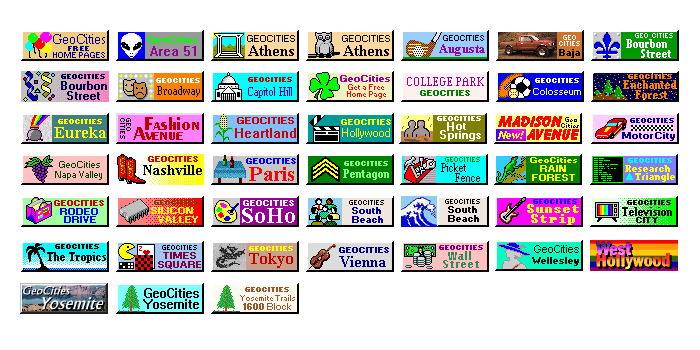

‘Geocities is organized into 33 neighborhoods like “Wall Street”; “Motor City,” for vehicle enthusiasts, and “Area 51,” which deals with science fiction. New members, known as “homesteaders,” pick a neighborhood according to their interests and set up a Web page as a cyberhome. Someone interested in movies, for example, could move into “a lot” in “Hollywood.”’

God, there’s so much there!

location, location, location...

Straightforwardly, it’s essentially an early ancestor of modern tagging systems. The ‘homesteading’ language raises a pretty significant eyebrow for our modern (gay, woke, etc) eyes. But what’s interesting about it is how it works as a spatial, ‘real world’ metaphor, to introduce people to a technology they might be using for the first time. Like, you’ve got this internet thing, which you don’t really understand, so you say ‘look, it’s just like a city, and you move into the city which reflects your interests, and then your website is like your apartment in the city’.

(Incidentally, this maybe (?) shows a time before the reactionary hysteria around cities really took off. If you tried this metaphor with something now you’d have a million people shouting at you on X - The Everything App about how London and New York have been taken over by muslim homeless people and going on the tube makes them scared).

And I feel like you see this kind of thing a lot on the early internet? Like, skeuomorphic design is another one. You’ve got this weird new thing called an ‘eye - phone’. What can it do? Well, there’s a button here which looks like a camera, and a button here that looks like a journal, and a button here which looks like a TV (that was youtube!). This maps to your everyday experience of the world, lowers the barrier to entry, and is the first domino in a chain that ends with you sitting on your sofa in 2025 watching instagram sludge.

This is all kinda whatever, but my point is, this might not have really felt like a metaphor at the time. If it’s your first experience with new technology, then you assume that this is just what an iPhone, or the internet, is. It’s only once you’re on board that the design starts to change, on the assumption that you’re now familiar with the technology, and other incentives can go wild.

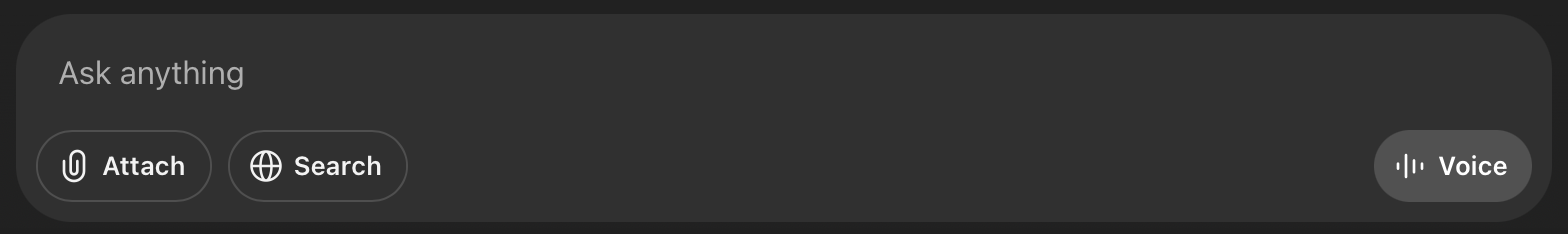

Which makes you wonder, what technologies feel natural to us right now, which are essentially metaphors? Open question, but I kinda gave the game away at the start - I think an obvious one is the form of the AI chatbot.

Nostalgebraist’s fantastic essay ’The Void’ looks at Anthropic’s 2021 paper “A General Language Assistant as a Laboratory for Alignment” which essentially created the AI chatbot ‘species’.

The authors describe a “natural language agent” which humans could talk to, in a chat-like back-and-forth format. They report experiments with base models, in which they fed in fragments intended to set up this chat context and provide some characterization for the “agent” that the human is “talking to”. […]

If you take the paper literally, it is not a proposal to actually create general-purpose chatbots using language models, for the purpose of “ordinary usage.” Rather, it is a proposal to use language models to perform a kind of highly advanced, highly self-serious role-playing about a postulated future state of affairs. The real AIs, the scary AIs, will come later (they will come, “of course”, but only later on).

Essentially, that ‘form factor’ (I guess?) of back and forth chatting wasn’t originally conceived as a way of bringing AI into the public sphere - it was a proposal for testing alignment, heavily influenced by science fiction. Thinking about that idea of ‘metaphors’ for new technology, it feels possible that the chatbot form took off because it maps very well on to how people already interact with technology - we send hundreds of messages a day, in that back and forth format. That format has evolved over time and is now pretty efficient for what it does.

That form might have helped it take off (and influenced the Anthropic paper as well), but is it a metaphor in the same way that GeoCities cities were a metaphor?

I mean, maybe?

There is an underlying technology (html & http, smartphones, the ‘base model’), and there’s a metaphorical something built on top to facilitate easier understanding of and interaction with the underlying technology (‘cities’, ‘realistic design’, the chatbox).

When I see articles like this, I think, yeah, people are lonely and isolated, and used to talking to people through that chatbox form (sometimes moreso than in person). AI is very good at mimicking a person and reflecting your own thoughts back to you, which is bad news if you’re kinda unstable at that point.

heyyyy bestie!

But is that made worse by the use of the chatbox form? If all my caveats hold up and AI sticks around as a strange presence of modern reality, but doesn’t go skynet for no reason (the ‘nothing ever happens’ position), then could these impacts be mitigated by varying how we interact with it?

Obviously there are financial incentives against that (though OpenAI apparently loses money on every prompt, so maybe they’re not that strong), but idk. It seems more hopeful than arguing that we need to somehow hobble AI’s capabilities, or tweak the training, or just accept that this happens now.

On the flip side, I have been worried about how AI trains us to think about other people. If you’re used to talking to people in that chatbox form (and there’s already fewer inhibitions against being a cunt there than face to face), and then you’re regularly interacting with something else through that chatbox form, and you take the (more healthy!) view that this isn’t something with interiority - but just acts like it - how much does that impact your perception of other human beings’ interiority? How much easier does it make it to transfer that thinking over and dehumanise them? Again, we’re in a broadly fascist cultural moment, and it’s not like many people need a lot of prompting to think of others as inhuman.

idk. i should write more about the alternative authority sources thing - see musk training grok to talk about white genocide in south africa. god all those words are horrible to say. what an aesthetically empty time we live in. sorry to end on such a downer. welcome to my blog!